# Configure Google Cloud Storage as your data pipeline source

Set up Google Cloud Storage as a data pipeline source to extract and sync records into your destination. This guide includes connection setup, pipeline configuration, and key behavior for working with .csv files stored in GCS buckets.

# Features supported

The following features are supported when using Google Cloud Storage as a data pipeline source:

- Extract and sync data from

.csvfiles in GCS buckets - Support for full and incremental sync through file detection

- Field‑level selection for data extraction

- Schema drift detection and handling

- Field‑level data masking

# Prerequisites

You must have the following configuration and access:

- A Google Cloud account with access to storage buckets that contain

.csvfiles - A Google service account with permissions to list and read objects

- Folder paths and file patterns for the files to sync

# Connect to Google Cloud Storage

Complete the following steps to connect to Google Cloud Storage as a data pipeline source. This connection allows the pipeline to extract and sync records from your storage buckets.

Refer to How to connect to Google Cloud Storage to learn how to create a Google service account and download the private key.

Connect to Google Cloud Storage

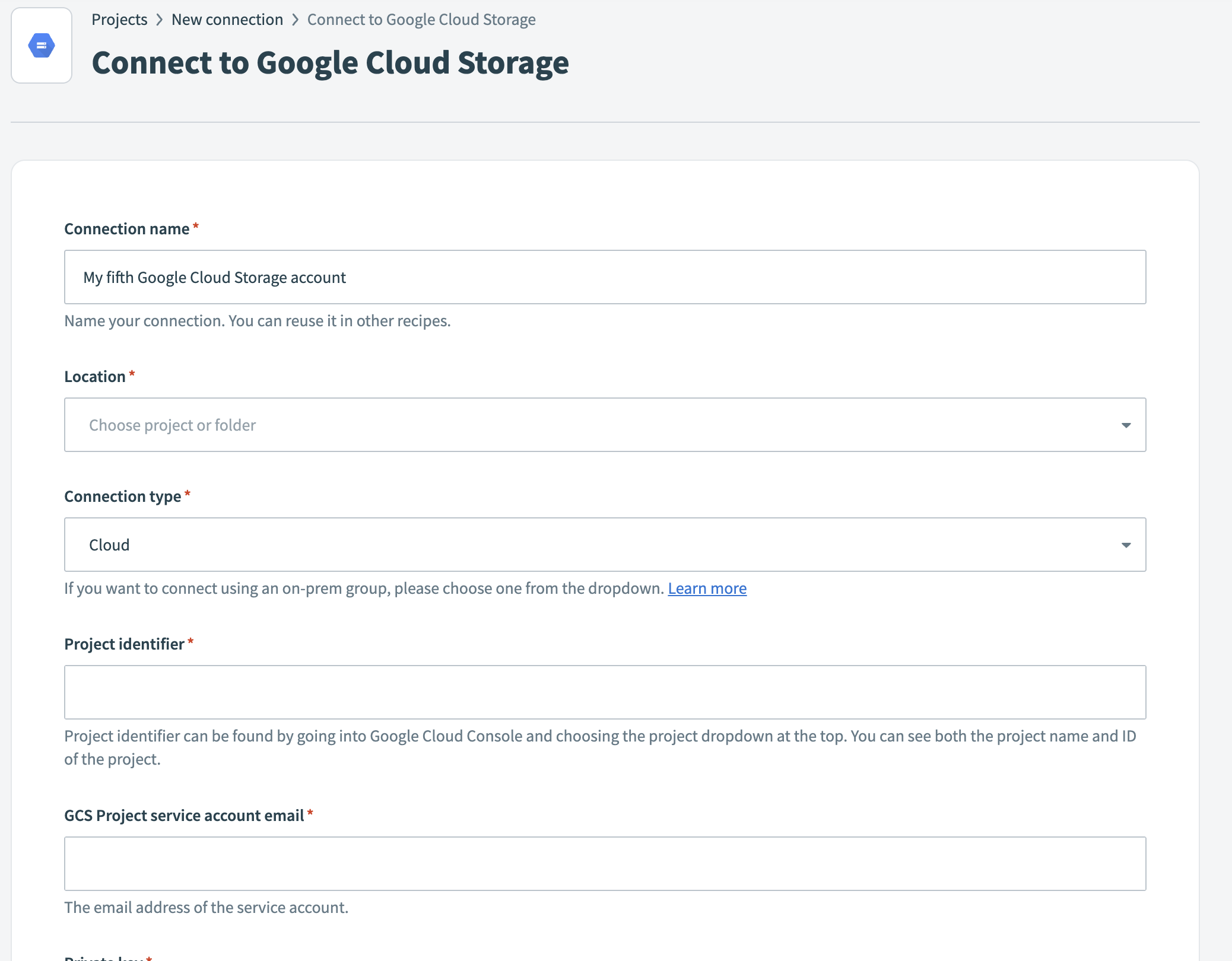

Select Create > Connection.

Search for and select Google Cloud Storage on the New connection page.

Enter a name in the Connection name field.

Google Cloud Storage

Google Cloud Storage

Use the Location drop-down to select the project where you plan to store the connection.

Select Cloud in the Connection type field, unless you need to connect through an on-prem group.

Enter your Google Cloud Project identifier. You can find this in the Google Cloud Console.

Enter the GCS Project service account email. This is the email associated with your Google service account.

Paste the Private key from your service account JSON file. You must include the full key, from -----BEGIN PRIVATE KEY----- to -----END PRIVATE KEY-----.

Optional. Use the Restrict to bucket field to limit access to specific buckets. Enter a comma-separated list such as bucket-1,bucket-2.

Optional. Expand Advanced settings and select Requested permissions (OAuth scopes).

Click Sign in with Google to authenticate and complete the connection setup.

# Configure the pipeline

Complete the following steps to configure Google Cloud Storage as your data pipeline source:

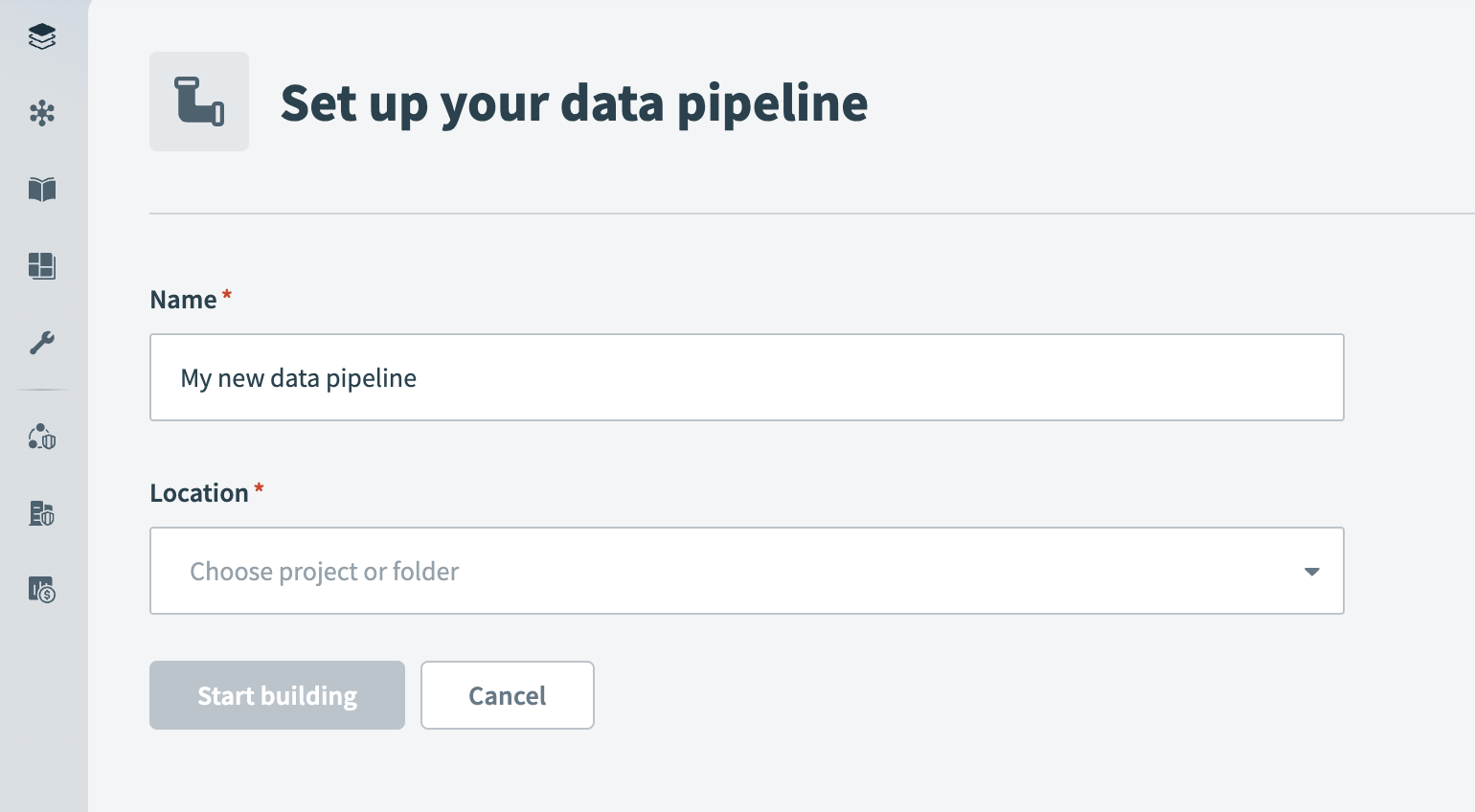

Select Create > Data pipeline.

Provide a Name for the data pipeline.

Data pipeline setup

Data pipeline setup

Use the Location drop-down menu to select the project where you plan to store the data pipeline.

Select Start building.

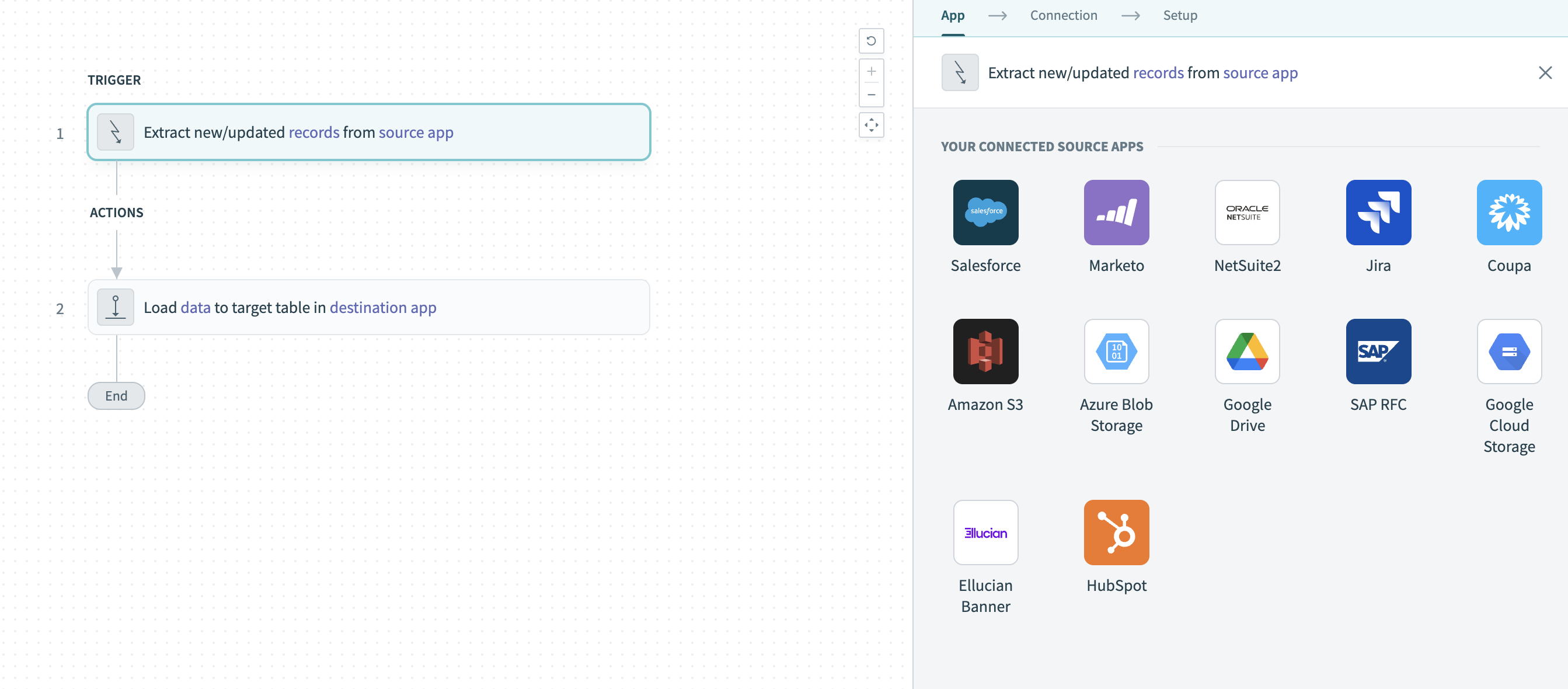

Click the Extract new/updated records from source app trigger. This trigger defines how the pipeline retrieves data from the source application.

Configure the Extract new/updated records from source app trigger

Configure the Extract new/updated records from source app trigger

Select Google Cloud Storage from Your Connected Source Apps.

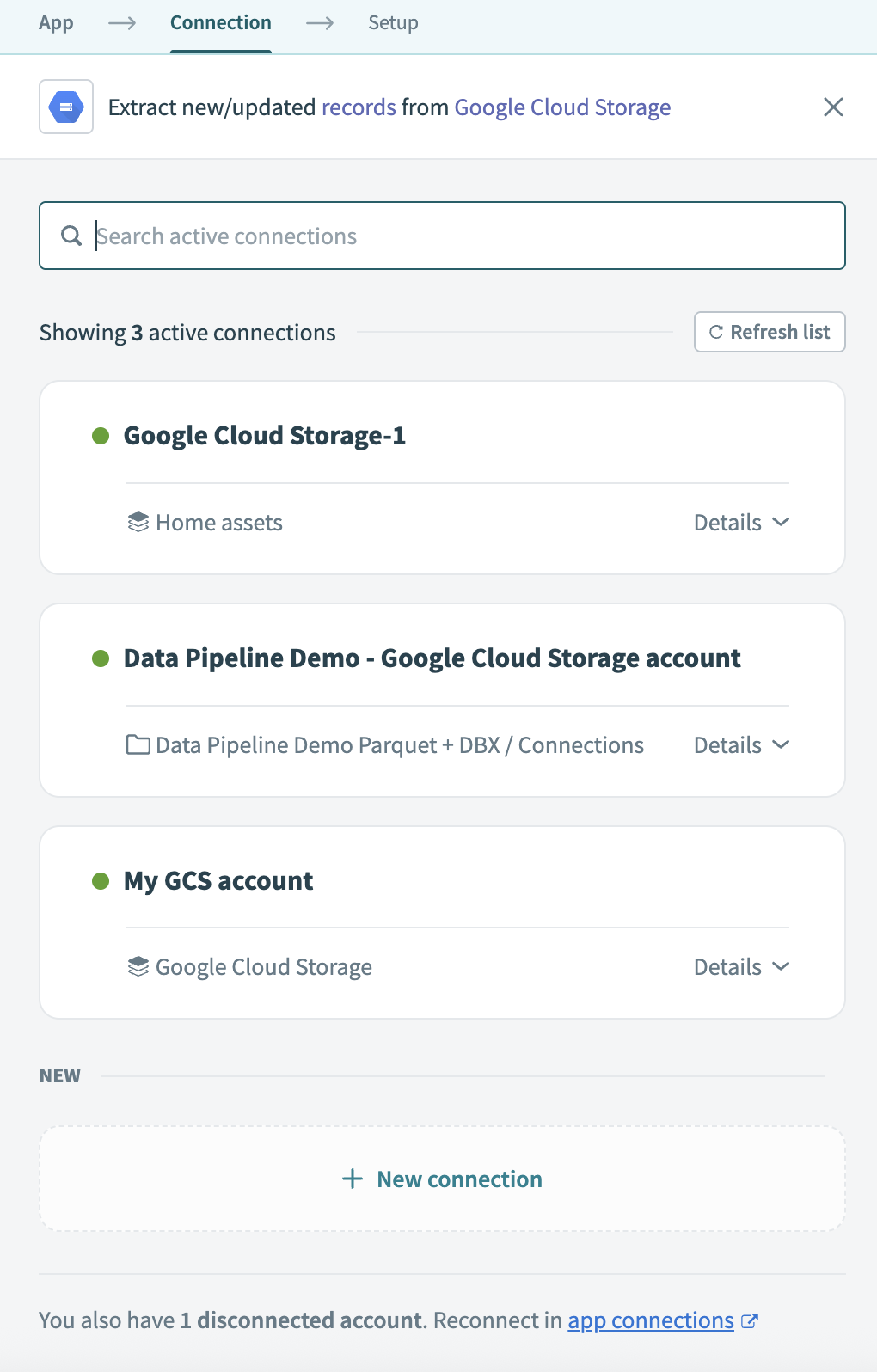

Choose the Google Cloud Storage connection you plan to use for this pipeline. Alternatively, click + New connection to create a new connection.

Choose a Google Cloud Storage connection

Choose a Google Cloud Storage connection

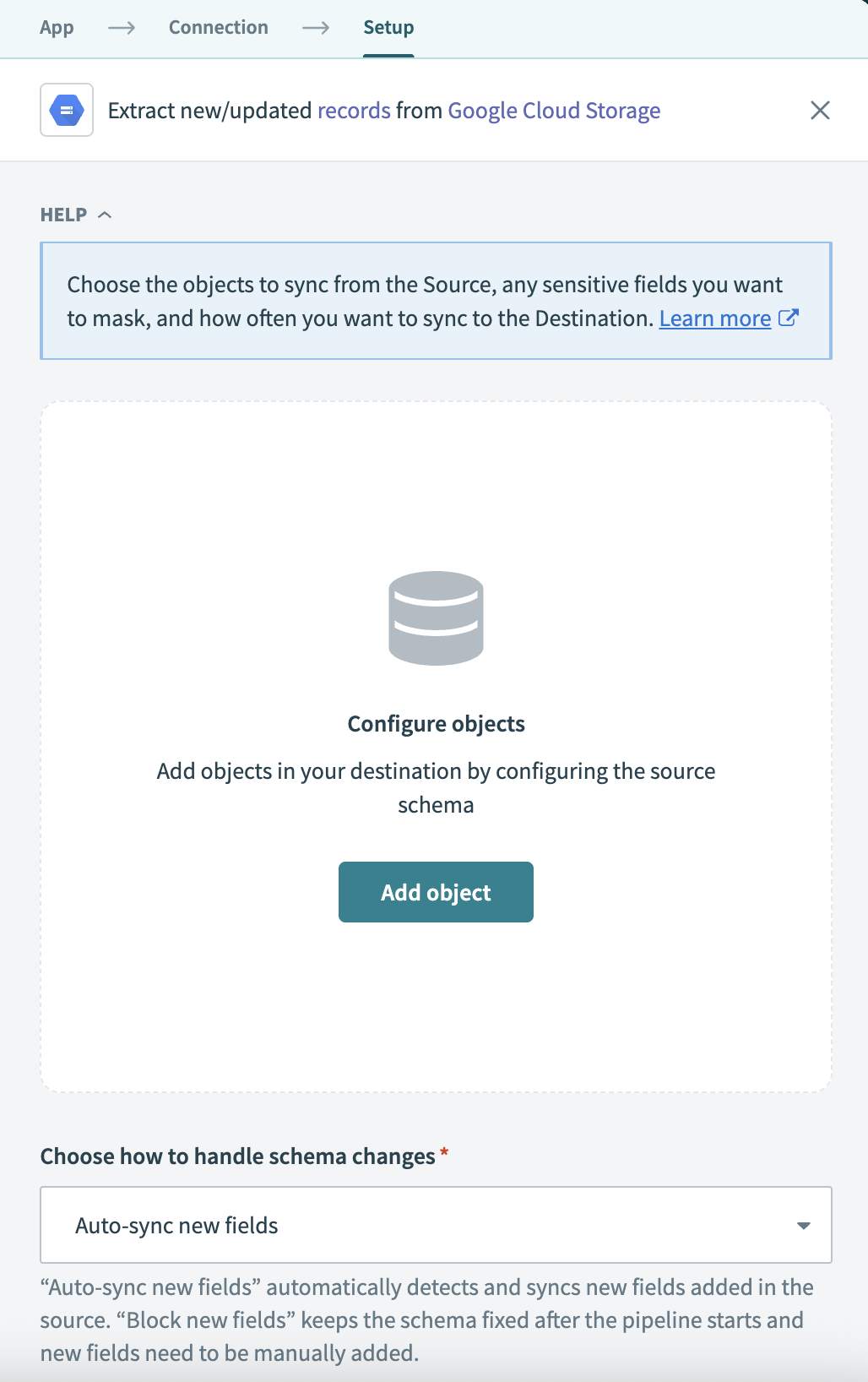

Click Add object to configure the files the pipeline monitors and syncs.

Add Google Cloud Storage objects

Add Google Cloud Storage objects

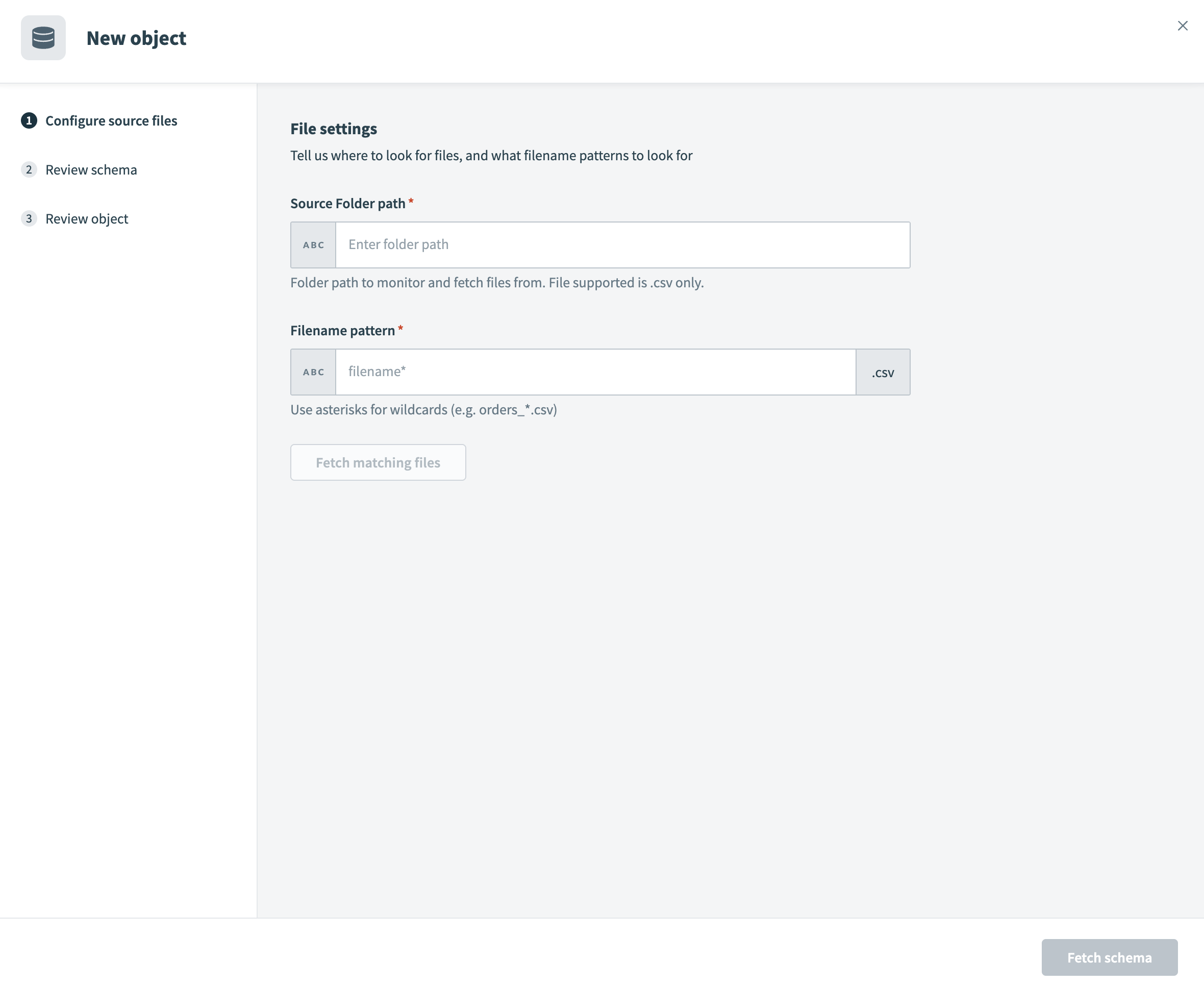

Enter the folder within the bucket to monitor in the Source Folder path field. The pipeline supports .csv files only.

Configure file settings

Configure file settings

Define which files to fetch using a pattern in the Filename pattern field. Use wildcards such as orders_*.csv to include multiple files.

Click Fetch matching files to preview files matching the defined pattern.

Select a Reference file to define the schema for your destination table.

Configure CSV settings:

Set whether the CSV includes a header in the Does CSV file include a header line? field.

Choose a delimiter in the Column delimiter field.

Set the Force quotes field to True to quote all values.

Click Fetch schema to load and preview columns from the reference file.

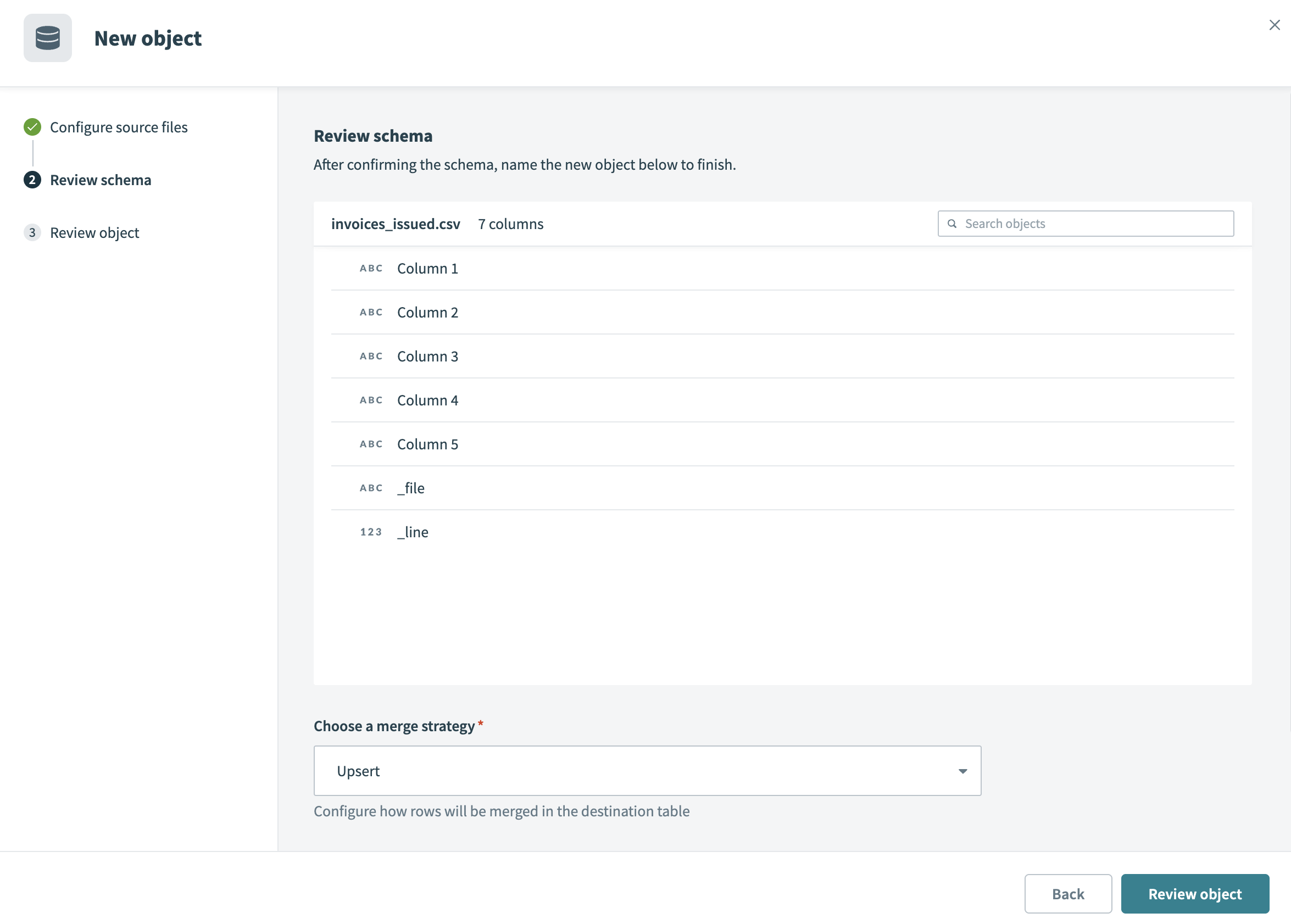

Review the schema to ensure it matches your expected table structure.

Review schema

Review schema

Configure how rows are merged in the destination table in the Choose a merge strategy field. Workato supports the following merge strategies:

- Upsert: Inserts new rows and updates existing rows. When you choose Upsert, the Merge method field appears. You must select a column that uniquely identifies each row. This key is used to determine whether a row exists in the destination and whether it's updated or inserted.

- Append only: Inserts all rows without attempting to match or update existing records. When you choose Append only, the pipeline doesn't match on a key and doesn't update existing rows.

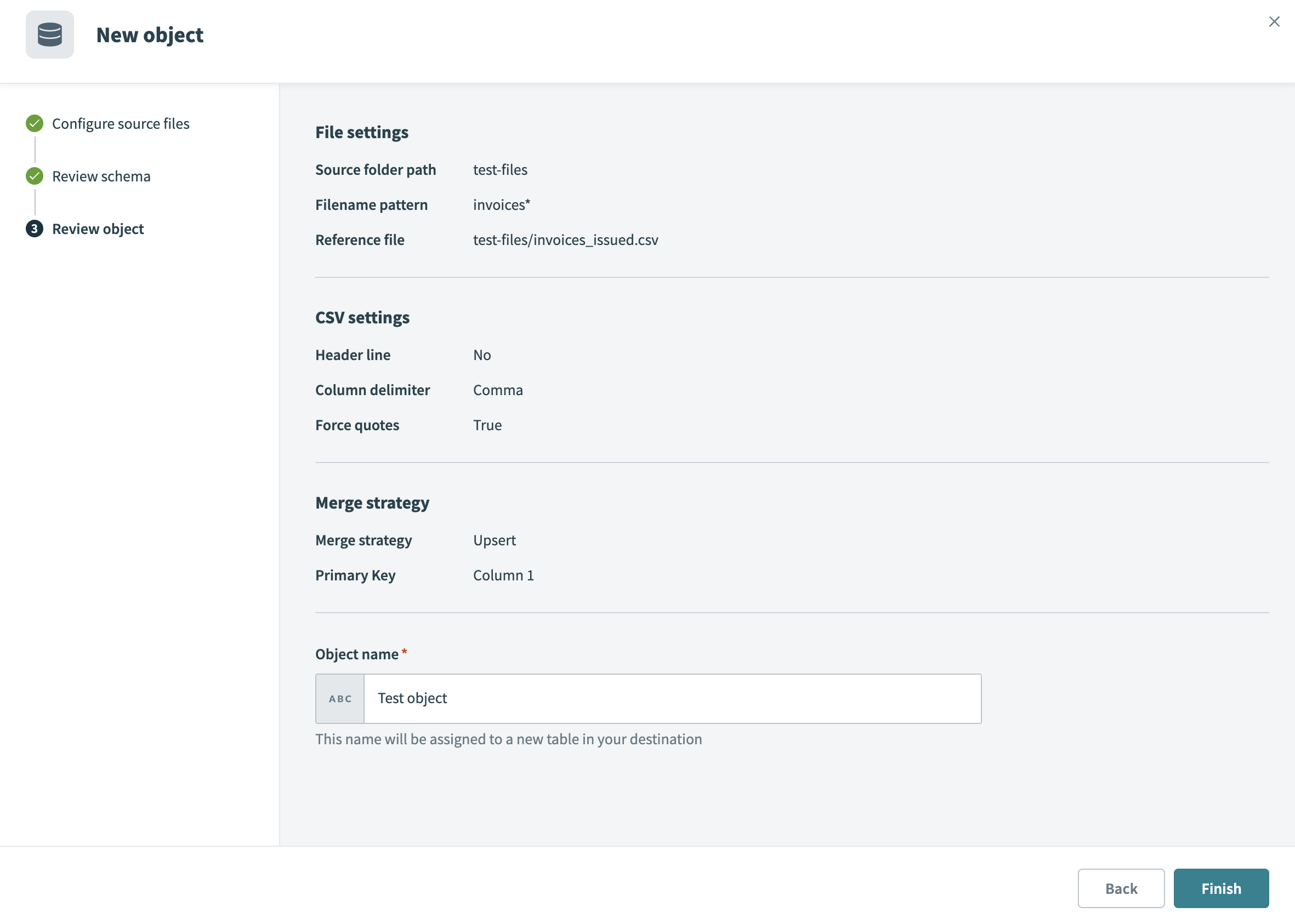

Click Review object to confirm your setup. This screen displays your file settings, CSV options, and merge details.

Review object

Review object

Enter an Object name. This name defines the destination table name.

Click Finish to save the object configuration.

Review and customize the schema for each selected object. When you select an object, the pipeline automatically fetches its schema to ensure the destination matches the source.

Expand any object to view its fields. Keep all fields selected to extract all available data, or deselect specific fields to exclude them from data extraction and schema replication.

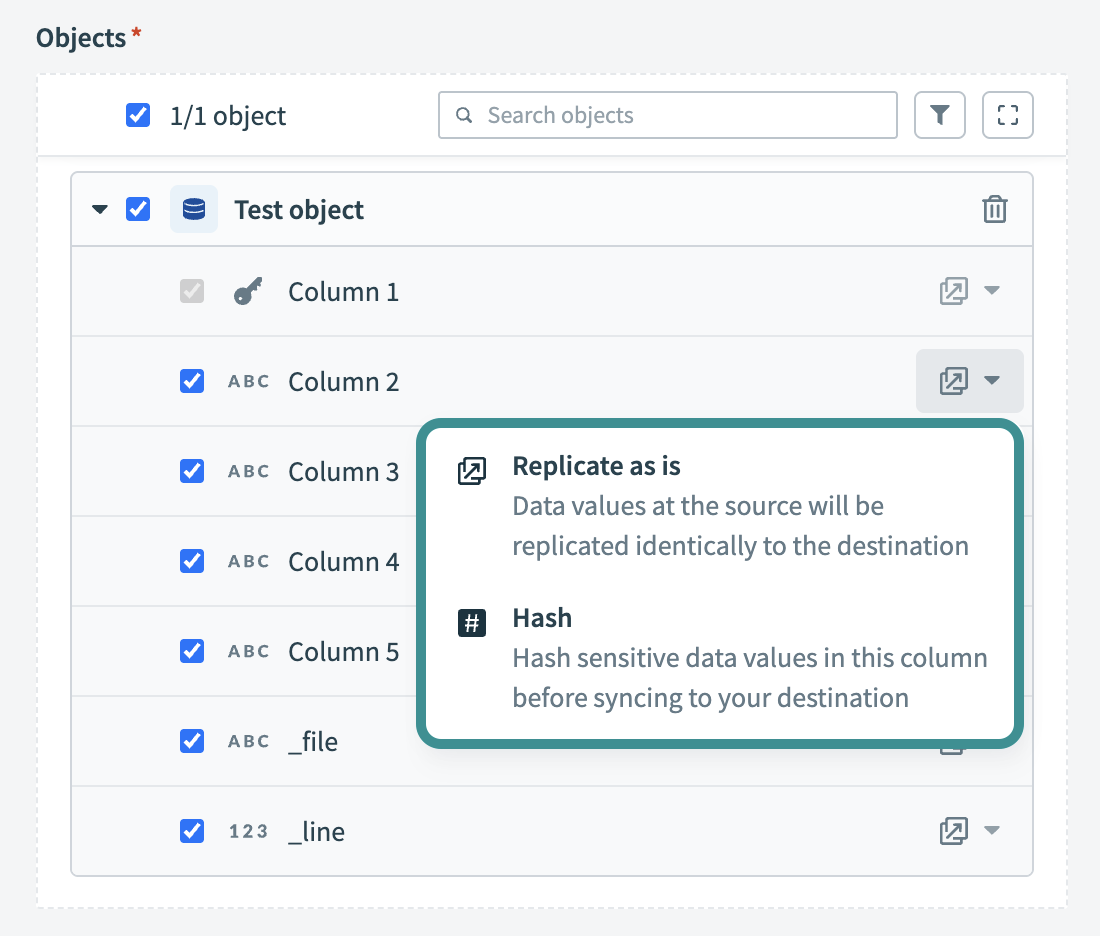

Optional. Configure field-level data protection. After you expand an object, choose how to handle each field:

- Replicate as is (default): Data values at the source are replicated identically to the destination.

- Hash: Hash sensitive data values in the column before syncing to your destination.

Configure field-level data protection

Configure field-level data protection

Click Add object again to add more objects using the same flow. You can repeat this step to include multiple Google Cloud Storage objects in your pipeline.

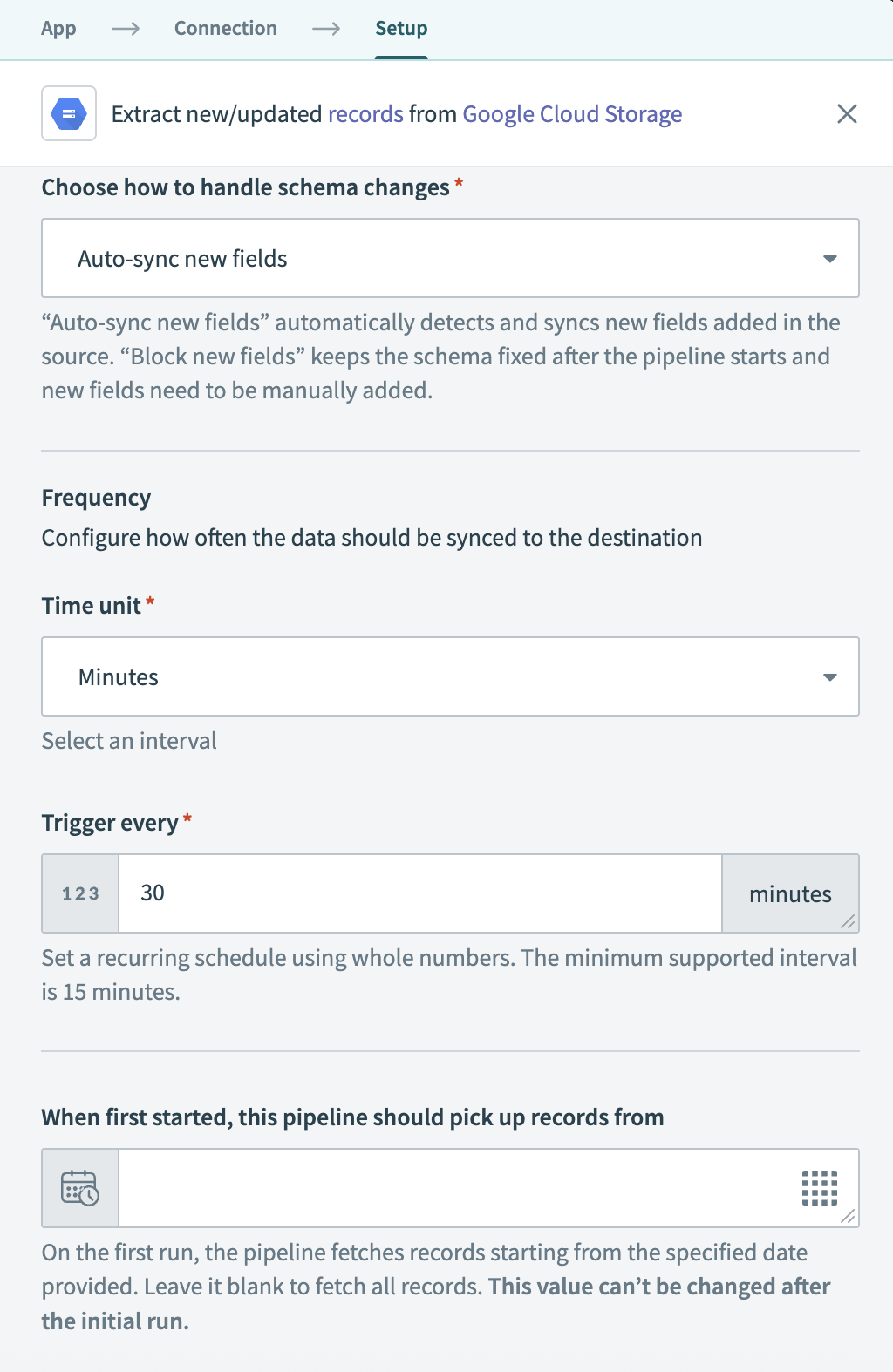

Choose how to handle schema changes:

- Select Auto-sync new fields to detect and apply schema changes automatically.

- Select Block new fields to manage schema changes manually. This option may cause the destination to fall out of sync if the source schema updates.

Schema drift management is currently not supported for file-based pipelines. Support for automatic schema updates is planned for a future release.

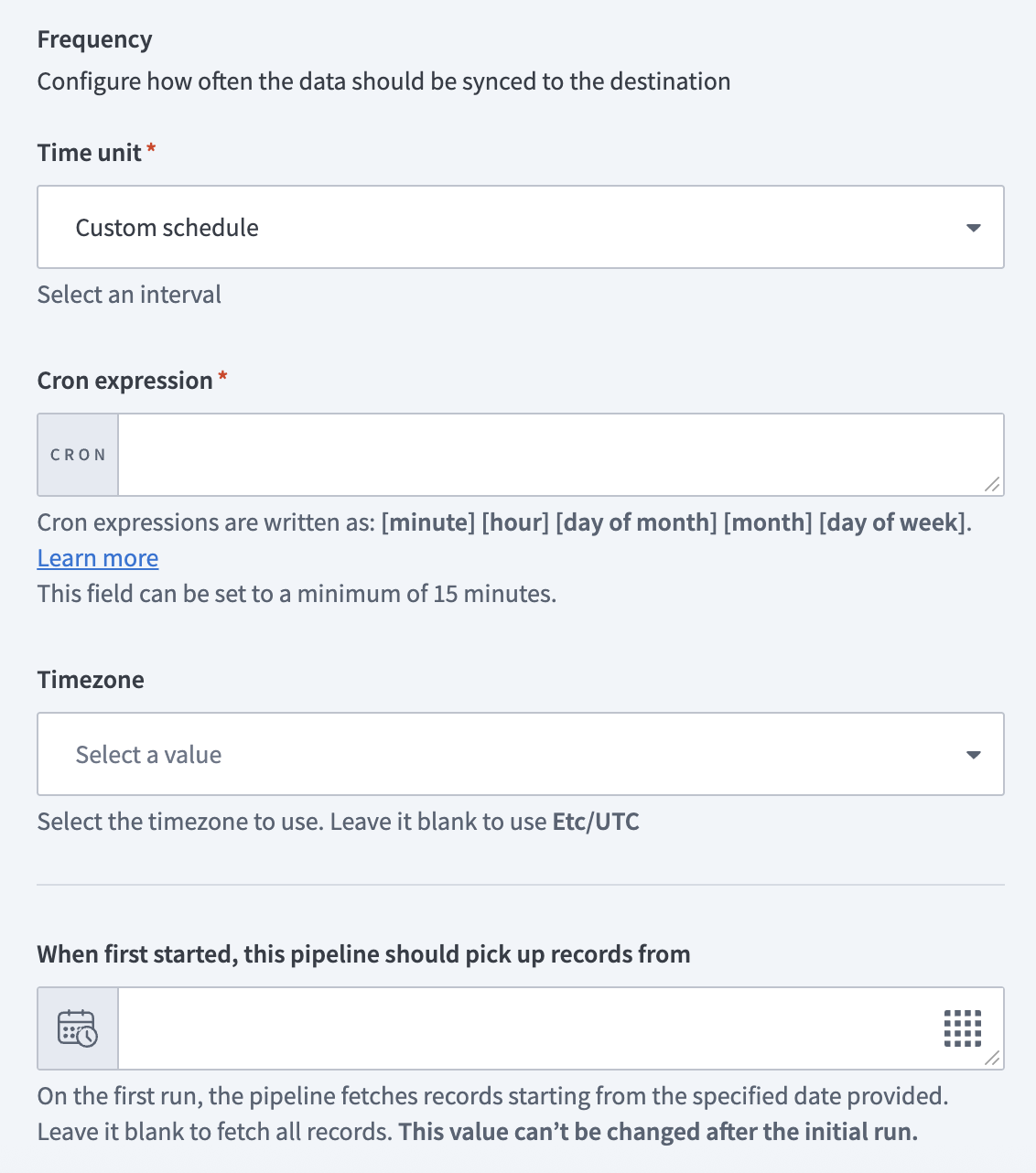

Configure how often the pipeline syncs data from the source to the destination in the Frequency field. Choose either a standard time-based schedule or define a custom cron expression.

# File schema and processing

The Google Cloud Storage connector reads .csv files stored in buckets you specify. These files define the structure and data the pipeline extracts and syncs to your destination.

Workato infers schema and data types from the selected reference file. All files matched by the file pattern must maintain the same structure to ensure accurate mapping.

Last updated: 2/9/2026, 10:12:02 PM

Configure sync frequency

Configure sync frequency Configure sync frequency

Configure sync frequency